The Format #050

Birthdays, bitter lessons, no more conversations and XXX content (the Musky kind).

Happy Friday! 🌞

It’s a double birthday day. 🥳🥳

The Format has hit the big ol’ half century, which means it’s been 100 weeks since the first issue. WILD.

And it’s my 25th b’day too! 🎂

It’s been another crazy fortnight here at Open Format, and we’ve got some big, big product announcements coming soon. EXCITING!

Anyway, let’s jump in…

The Bitter Lesson

“The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin”.

This actually isn’t a new essay at all. It’s from (what feels like) decades ago: all the way back in 2019. But with the current state of affairs, where the release of a record-breaking AI product seems to be a more regular occurrence than sleep, this beautiful two-page essay is more true than ever before.

It speaks to a problem many of us builders in the tech (and wider) space are feeling at the moment.

How can we know what to build when the next AI product release may just make all the hard work and engineering effort we put in obsolete?

How do we extrapolate forward any reasonable amount of time?

What is the best way to build in this world of rapid acceleration?

The answer is simple. Align yourself, your business model and the way you build with the inevitable, rapid improvement in AI model performance.

Quite simply: build products that make you excited for the next AI model release, not scared of it.

And if you don’t, you have a bitter lesson in store.

The case against conversational interfaces

With the recent(ish) improvements in AI and large language models (LLMs), almost every tech company is battling to find the best way to integrate them.

The all-too-common approach is to hit the user with a blank text box, the UI feature made all too famous by ChatGPT.

But for quite a while I’ve been slightly bemused by this approach. Maybe it’s just me, but I’m a BIG fan of constraints, and the blank box terrifies me. Unlimited options, so of course I have nothing to ask.

I thought I was the only one thinking this way. Then I stumbled across this essay by Julian… he dives into this conversational interface craze way better than I could.

Some of my favourite points that Julian makes:

Natural language is very inefficient. It’s almost always a huge bottleneck and is much slower than a GUI. Don’t throw away all the good work we’ve done improving efficiency with GUIs; instead, augment them with additional inputs.

AI at the OS level is a HUGE opportunity and it could be Apple’s best and only chance at winning the AI race.

There is most likely about to be a boom in AI at the browser level. It’s a huge opportunity, and one of the few chances ever to knock Google off its search throne. The question is who will strike first: OpenAI? Anthropic? Someone else completely?

Put simply, AI and conversational interfaces should complement current experiences. Definitely not replace them.

Epoch-Based Emissions

I think most people would agree that the current token models within the web3 space are far from perfect.

Some models prioritise distributing the tokens to as many people as possible as quickly as possible → the ‘community-centric’ fair launch. But these often suffer as no one is sufficiently incentivised or empowered to really drive the project to make it into something bigger.

Other projects take a more institutional approach and keep a lot more of the token supply for the team and investors. This allows them to move faster but is, by its nature, far more centralised, and makes it harder to build a strong community that has a real sense of ownership.

Either way, almost all of these options require you to have nearly everything figured out pre-launch. A VERY daunting task…

Teams are expected to somehow forecast forward many years and model how the token will behave in unknown market conditions after unknown product developments. Given the nigh on impossible nature of this task, current projects have actually done surprisingly well.

But what if projects actually had a way to review and adjust their token models?

What if they split up this distribution process so that all the decisions didn’t have to be made at the beginning, with very little information to go on?

This essay suggests an epoch-based approach. We’re experimenting with a more real-time approach, controlled by a team of AI agents that maintain a deep, ongoing understanding of the community and its goals, and adjust the token distribution accordingly.

Other articles + videos we’ve found interesting this week…

xAI has acquired X (Twitter) → Yes, a 2-year-old AI company has just acquired the social media behemoth. The Musk Empire. The most important part here: xAI now has unfettered access to the wealth of Twitter’s proprietary data. It’s xAI’s edge over the other top AI models. 🚀

Grok’s Million Dollar Moment is Loading → Yes, X again… This time it’s because @grok, the official X AI agent, has claimed $690,102 in fees. How? It used another AI agent called @bankrbot to create a token called $DRB. Now it’s earning rewards for every trade that happens with $DRB. (A robot is going to be a millionaire before me… no fair…) 🤖

Elon Musk’s Grok AI lands on Telegram, gaining access to over 1 billion users → XXX. Okay, I promise this is the last one about Twitter. This time it’s about how Musk has decided to distribute Grok. While ChatGPT, Claude and most of the other AI models mostly expect you to come to their own websites and apps, Grok took a different approach.

You can chat to it directly through Twitter, and now Telegram. Meeting the users where they’re at. The only other model rivalling Grok’s distribution channels is Llama via Zuck’s social media triopoly. ❌

Agents as NFTs → This post makes the very interesting argument that by treating agents as NFTs (rather than giving them wallets and treating them as just another user) you can let them have some rather interesting features…

Possession. The holder of the NFT will have the exclusive right to access the agent’s memories.

Improvements. The agent’s memory will update every time the NFT holder uses the agent, gaining holder-specific experience. The agent will also remember the refinements of the holder.

Provenance. Because the agent can only be used by its NFT holder, a record of past holders will indicate its experience, functioning like a “resume.”

Apply for Retroactive Funding for Your Neighborhood Open Source Project → Using web3 to reward hyper-local neighborhood builders for being awesome and helping to create public infrastructure. Now this is a cause we can 100% get behind. Especially in light of the challenges facing local communities today. Giving these sorts of communities the recognition and tools they need to thrive is at the core of our mission too. 🌞

We should talk less about public goods funding and more about open source funding → Another incredibly interesting exploratory essay from Vitalik Buterin, the co-founder of Ethereum. The takeaway? For digital public goods there is absolutely no reason why they shouldn’t just be referred to as ‘open source’ projects. It’s a far less loaded phrase and could help increase funding.

Open source should not mean "it's equally virtuous to build whatever as long as it's open source"; it should be about building and open-sourcing things that are maximally valuable to humanity.

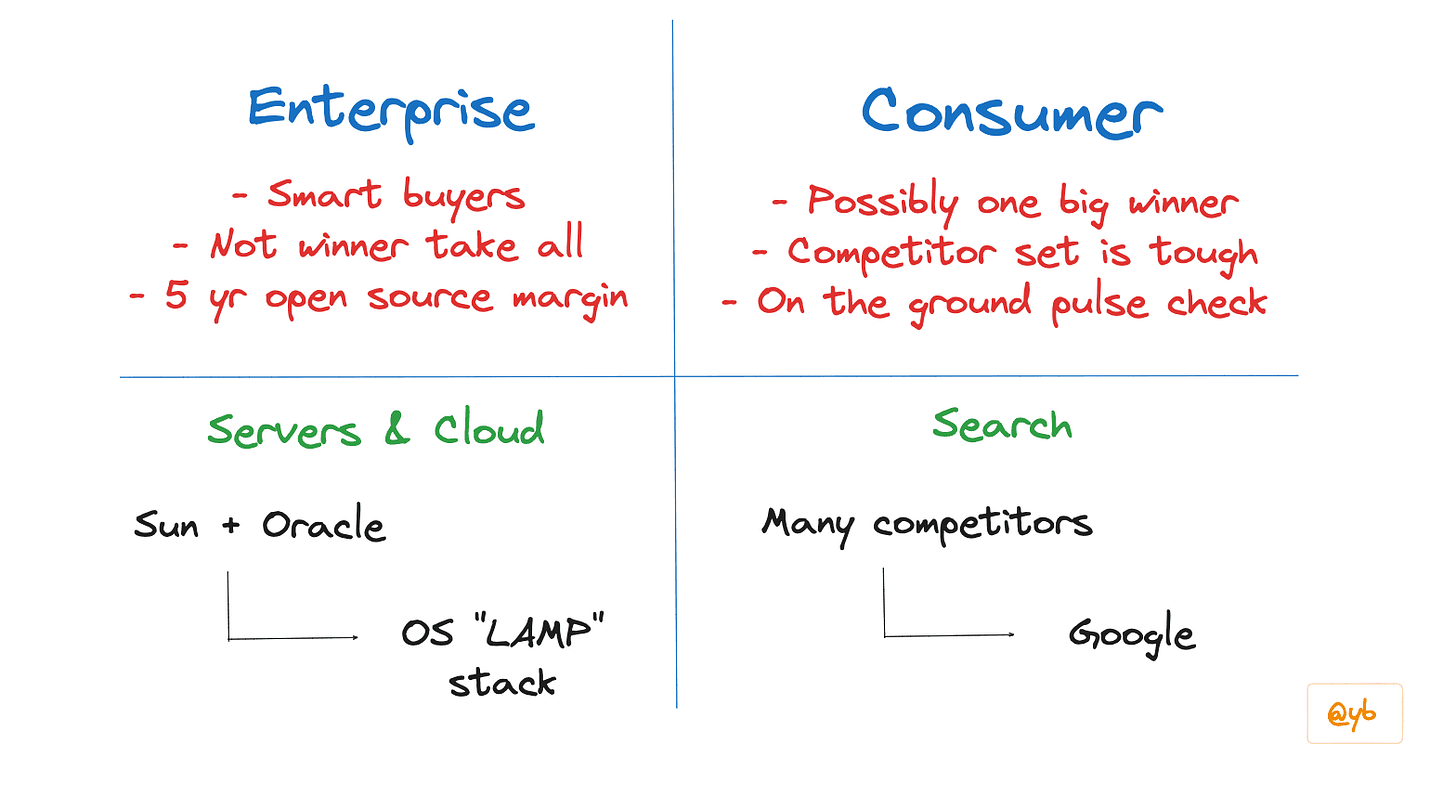

Gurley's Sun-Oracle analogy for Enterprise AI → This post dives into the battle we are currently seeing play out between open source and closed source AI models. Looking back through history, this isn’t the first time we’ve seen this. We saw it with Linux vs Unix at the OS level and in search too.

The probable outcome? Open source will win at the enterprise level because, for businesses, margins matter (big time). But at the consumer level there may be one (closed source) winner and, with the current state of mindshare, that could well be ChatGPT.

What we’ve been up to…

Andy shared our plan for Phase 2: Transitioning from Rewards to Economies in the most recent episode of Happy Hacking.

Andy and Sarah shared how we are changing the future of web3 communities at Near’s Town Hall. With over 5,000 viewers and counting, this was a super exciting opportunity to share what we’ve been building.

Matchain and MatchID have shared how hyped they are to be partnered with us.

That’s all for this week.

If you want more news from us, you can also sign up to our builders newsletter/community via our website!

Have a great weekend, Dan and the OPENFORMAT team 👋🏽

Join our community

Join our Telegram group, The Format, for relevant news and conversations.

Check out our website (feedback much appreciated!!)

Join our Discord to get all the real-time updates on what is being built with Open Format

Subscribe to our YouTube → we are currently running a build series where we are streaming the whole process as we build our new product in public

Image created with GPT-4o: “Create image of the Twitter bird and the letter X landing in Telegram and in local neighbourhoods” (VERY creative prompt, I know…)