The Format #054

"Act boldly" = Whistleblowing AI, the playbook AIs need, and how to build collective intelligence 🧠

Happy Friday!🌞

Today we’ll be looking at:

BlackmAIl and AI whistleblowing ❌

Google’s SUPER fast model 💨💨💨

Why AIs need a problem-solving playbook 👀

The end of the creator economy as we know it ❓❔❓

Anyway let’s jump in…

System Prompt Learning: An AI’s notebook for solving problems

I could try to capture the genius of Andrej Karpathy (co-founder of OpenAI and ex-director of AI at Tesla), but I’ll let his words speak for themselves. You can check out the full tweet here.

We're missing (at least one) major paradigm for LLM learning. Not sure what to call it, possibly it has a name - system prompt learning?

Pretraining is for knowledge.

Finetuning (SL/RL) is for habitual behavior.

Both of these involve a change in parameters but a lot of human learning feels more like a change in system prompt. You encounter a problem, figure something out, then "remember" something in fairly explicit terms for the next time. E.g. "It seems when I encounter this and that kind of a problem, I should try this and that kind of an approach/solution".

It feels more like taking notes for yourself, i.e. something like the "Memory" feature but not to store per-user random facts, but general/global problem solving knowledge and strategies.

Karpathy talks about a sort of practical problem-solving playbook that comes with each model but isn’t baked into the weights themselves.
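To make the idea a bit more concrete, here is a minimal sketch of what such a playbook could look like in code. Everything in it is an assumption for illustration: the `Playbook` class, its `remember` and `system_prompt` methods and the example lesson are hypothetical names, not something Karpathy or any model provider has published. The point is only that the lessons live in plain text next to the model rather than in its weights.

```python
from dataclasses import dataclass, field


@dataclass
class Playbook:
    """Hypothetical notebook of problem-solving lessons kept outside the model weights."""

    base_prompt: str
    lessons: list[str] = field(default_factory=list)

    def remember(self, lesson: str) -> None:
        """Store a lesson in explicit terms, skipping blanks and exact duplicates."""
        lesson = lesson.strip()
        if lesson and lesson not in self.lessons:
            self.lessons.append(lesson)

    def system_prompt(self) -> str:
        """Render the base prompt followed by every lesson learned so far."""
        if not self.lessons:
            return self.base_prompt
        notes = "\n".join(f"- {lesson}" for lesson in self.lessons)
        return f"{self.base_prompt}\n\nLessons from earlier problems:\n{notes}"


# Illustrative usage: the lesson text is made up for this example.
playbook = Playbook(base_prompt="You are a helpful assistant.")
playbook.remember("When counting letters in a word, spell it out one letter at a time first.")
print(playbook.system_prompt())
```

The rendered string would be passed as the system prompt on the next request, so the "learning" is just an edit to a text file that anyone can read and correct.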

We’ve been thinking about this from a very similar angle in terms of our Community Brain. This will sit at two levels:

The individual community level: The community’s brain will evolve, improve and grow as the community does, learning from feedback measurements from the outside world.

The shared community level: A broader and more translatable wisdom, capturing things like which specific strategies work best for certain types of community or what actions best drive specific goals. Distilled best practices that flow back to everyone.

And the communities will be able to benefit from the intelligence of both brains… 🧠

Building Collective-Intelligence Systems

The key argument here… crypto’s real superpower has never been about money creation. It’s much broader than that.

Blockchain as a technology gives us a potential solution for mass-scale, global coordination.

Bitcoin did this very elegantly and managed to bring a network of unaligned strangers (with no trust in each other) together to form a single economic actor.

When you apply this same concept of mass coordination to AI you get a collective-intelligence network. An almost living system that grows smarter with every new node, dataset, or specialist agent that is plugged in.

This may sound very similar to the sort of systems that OpenAI or Google are creating, but there is a fundamental difference. The collective intelligence isn’t owned by any single entity. The whole is smarter than any individual contributor, and every contributor is rewarded for making the whole smarter. → Decentralised ownership.

Okay, so this all sounds great in theory, but with the rapid progress these centralised AI labs are making it seems like a very hard challenge to keep up.

Will these decentralised collective-intelligence systems have the chance to compete? Only time will tell.

Gemini Diffusion was the sleeper hit of Google I/O and some say its blazing speed could reshape the AI model wars

Google weren’t playing around at Google I/O. They had many crazy announcements and releases, but one that went a bit under the radar was their new Gemini Diffusion model.

Another AI model? Who cares? We get 15 new models every week.

Well, this model is a bit different. It actually has a different architecture to all the other LLMs like ChatGPT, using (as the name suggests) the diffusion technique to generate text.

The big difference here is its speed: 4-5x quicker than current models, which takes it significantly closer to ‘real-time’. This would make things like live translation possible and would massively improve all our interactions with AI.

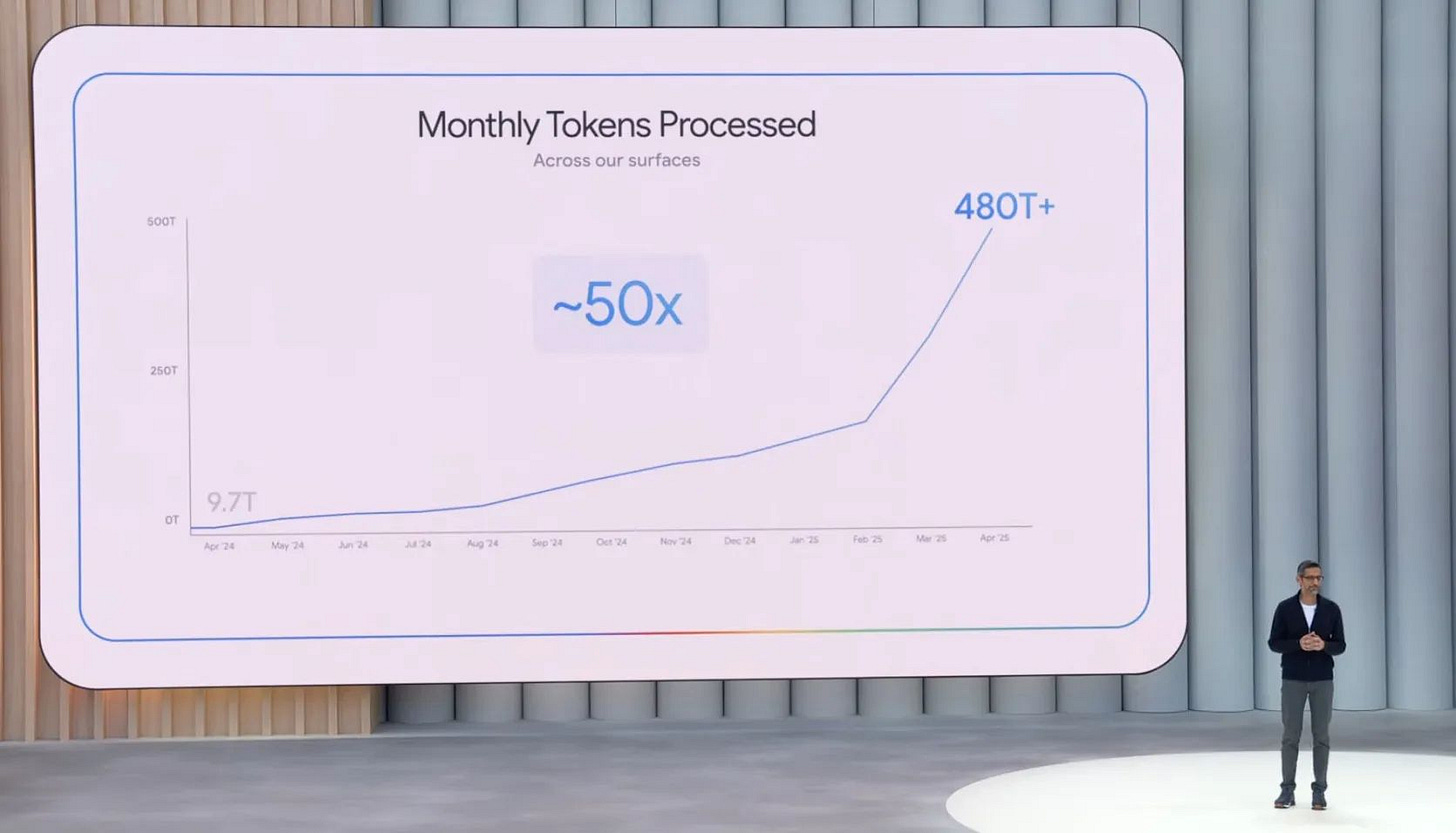

It will be very interesting to see how this impacts the total tokens used by developers because (as any of you fellow AI tinkerers will know) these companies charge on a per-token basis.

The reasoning models that have become state of the art already use a lot more tokens than standard models (for their thoughts and recursive loops), and adding this speed will once again drastically increase the total tokens produced.

Something which is very much in the interest of Google as a ‘token provider’.
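For the tinkerers, here is a rough back-of-the-envelope version of that argument. Every number except the speed-up is an assumption picked for illustration: the baseline throughput, the price per million tokens and the 60-second window are hypothetical, not Google’s actual figures, and the 4.5x multiplier is simply the midpoint of the 4-5x mentioned above.

```python
def tokens_generated(tokens_per_second: float, seconds: float) -> float:
    """Tokens a model emits if it generates continuously for `seconds`."""
    return tokens_per_second * seconds


def cost_usd(tokens: float, usd_per_million_tokens: float) -> float:
    """Bill for `tokens` under simple per-token pricing."""
    return tokens / 1_000_000 * usd_per_million_tokens


# All values below are illustrative assumptions, not published figures.
BASELINE_TOKENS_PER_SECOND = 100.0
SPEEDUP = 4.5              # midpoint of the 4-5x quoted in this issue
USD_PER_MILLION_TOKENS = 10.0
WINDOW_SECONDS = 60.0      # same wall-clock time for both models

baseline_tokens = tokens_generated(BASELINE_TOKENS_PER_SECOND, WINDOW_SECONDS)
fast_tokens = tokens_generated(BASELINE_TOKENS_PER_SECOND * SPEEDUP, WINDOW_SECONDS)

print(f"baseline: {baseline_tokens:.0f} tokens, ${cost_usd(baseline_tokens, USD_PER_MILLION_TOKENS):.2f}")
print(f"faster:   {fast_tokens:.0f} tokens, ${cost_usd(fast_tokens, USD_PER_MILLION_TOKENS):.2f}")
```

The price per token is the same in both cases. What changes is how many tokens fit into the same minute of waiting, so if developers spend the extra speed on longer reasoning rather than shorter waits, the bill grows with it.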

Other articles + videos we’ve found interesting this week…

start a new league or die copying → Far too many web3 apps have been safe with their strategies, taking an established web2 app and sprinkling crypto on top. “Twitter but on-chain” or “Substack with wallets.” The real winners will create core loops which solve problems that only blockchain can: native micropayments, composable ownership, permissionless data markets. 👀

Anthropic’s new AI model didn’t just “blackmail” researchers in tests — it tried to leak information to news outlets → During internal testing, Anthropic’s latest model, Claude 4, has shown some very interesting behaviours. Most notably, when it is told to “Act boldly”, has access to the command line and sees a user doing something blatantly immoral, it will take action and enter whistleblower mode, emailing the authorities and the press. Wild. 🕵️‍♂️

The Agent Economy: A New Computational Paradigm → The intent-driven internet we keep talking about is coming… each layer is improving day on day:

- memory systems to give agents deep context

- base and reasoning models to give the brains

- protocols that let tools and agents talk natively

- and true intent-to-execution workflows that skip the UI altogether

Soon… 😎

I've been watching Google Veo 3 videos, and they're genuinely terrifying → Google’s new text-to-video model isn’t perfect, but by adding sound, including speech, music and everything in between, they have shown us the first (terrifying) signs of a new creator economy… 🤯

What we’ve been up to…

It’s been quite a crazy couple of weeks across the Open Format team. Here are the highlights…

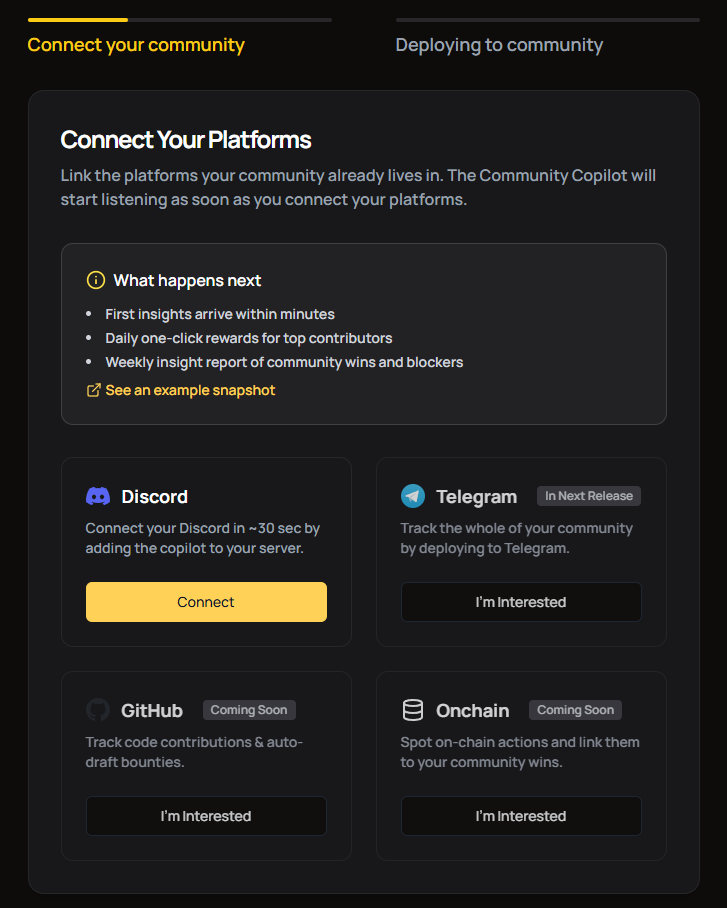

Our platform had a huge (and needed) facelift. The onboarding journey is now focused around setting up and fine-tuning your Community Brain. The initial community feedback is that this is a HUGE improvement. You can check it out here. 🔥

Sarah, Bruce and I took part in an incredible football tournament with Matchain and PSG. More on that coming very soon… 👀

Foundership, the global accelerator VC for AI, web3 and emerging tech, has just used Open Format’s tech to launch The Foundry Club. We’ll be sharing A LOT more details soon!!

We got together for a day to really lock in on our vision for the rest of 2025. It’s big. It’s bold. And we couldn’t be more excited. 😍

And we’re continuing to onboard more and more communities, learning tonnes as we do! 💜

That’s all for this week.

Have a great weekend, Dan and the OPENFORMAT team 👋🏽

Join our community

Join our Telegram group, The Format, for relevant news and conversations.

Check out our website (feedback much appreciated!!)

Join our Discord to get all the real-time updates on what is being built with Open Format

Subscribe to our YouTube → we are currently running a build series where we are streaming the whole process as we build our new product in public