The Format #056

How to build a memory, the Great Differentiation and the world of Context Engineering. 🧪

Happy Friday! 🌞

So as a rule of thumb, whenever I write the Format I never really plan out what topics I want to cover. I just read, watch and digest, then pick the most interesting things.

Sometimes a theme emerges.

Sometimes it’s all just a jumble of random news.

This week though, it feels like the theme is actually very clear.

It’s all about memory, and how effectively designing memory is THE key to getting the most out of AI.

Even with Grok 4 being released, supposedly the best model yet, memory feels so much more important.

But who knows, maybe this is just my subconscious product brain kicking in, since we’re deep in memory land for our CommunityOS, and we’re seeing just how powerful this can be for communities.

Anyway, less Dan rambling.

Let’s jump in…

Context Engineering for Agents 👀

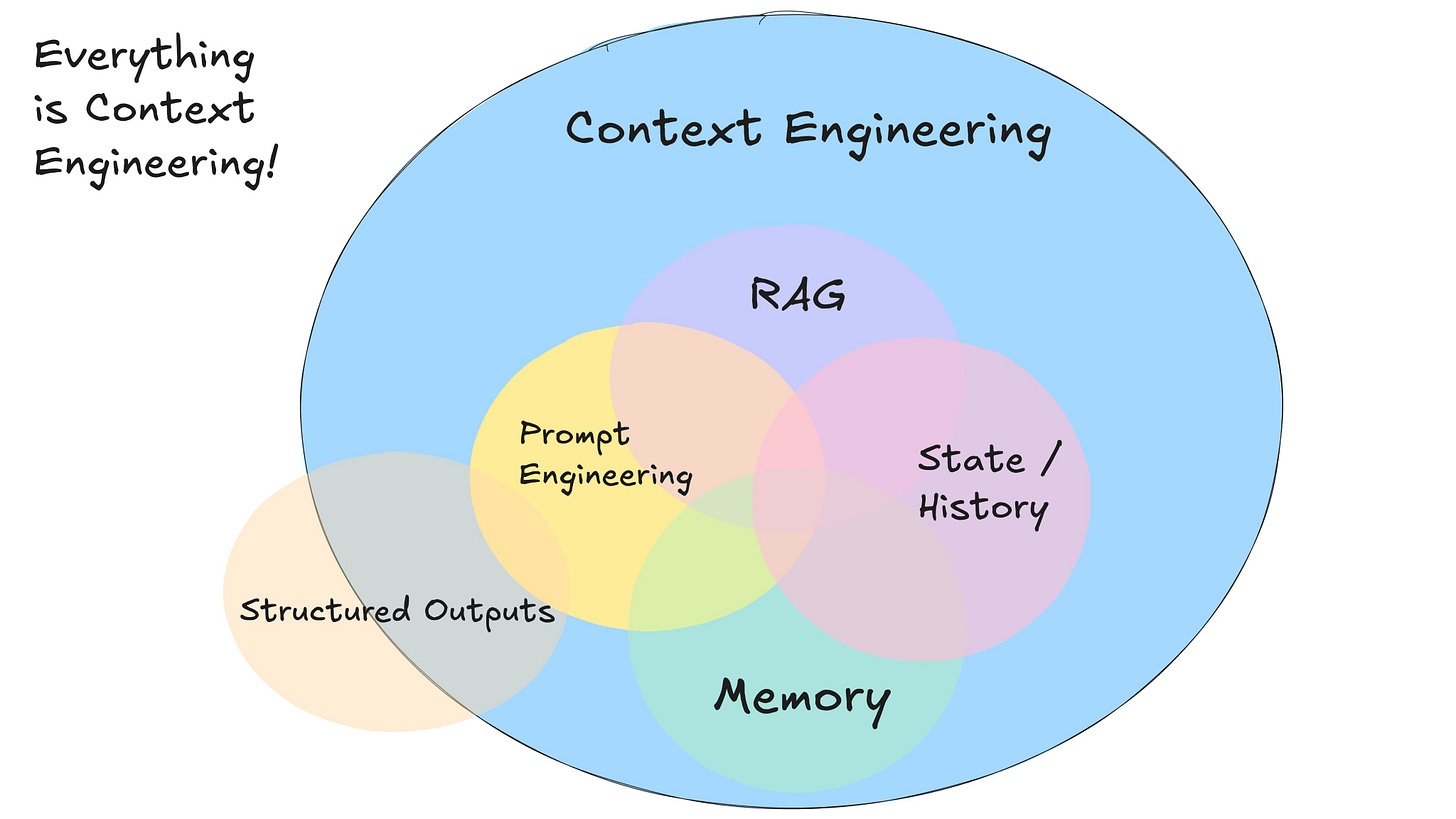

For the longest time, the hard - and often very tedious - task of curating and refining the context that an LLM has before it answers a question or makes a query was referred to as prompt engineering.

The problem was ‘prompt engineering’ as a concept became a bit of a meme. The term became associated with banks of templates that could squeeze something extra out of the model, word tricks, quirks and complex written structures.

But when building something like our CommunityOS, prompt engineering took a different form.

It was all about bringing the right information or context to the AI model at the right time. Things like selecting the right memories from previous conversations to include, pulling in a relevant community goal that may have been mentioned 3 weeks ago, including the name and stats for your 3 community moderators etc.

It’s about wrapping AI in a sophisticated memory system, something that is definitely no easy feat.

Well good news, the industry seems to have landed on another phrase to capture the true value of this process: ‘context engineering’.

“The art and science of filling the context window with just the right information at each step of an agent’s trajectory”

This post by LangChain is a 101 on context engineering: the different types of memories, the various ways to manage your context and, practically, the different frameworks you can use to implement all this in your project.
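To make the ‘right information at the right time’ idea a bit more concrete, here’s a tiny Python sketch. It’s purely illustrative: the `Memory` class, the keyword-overlap `score` function and the character budget are all assumptions made up for this example, not how CommunityOS or any LangChain framework actually works. A real system would more likely use embeddings and token counts.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    """A stored snippet (hypothetical structure, for illustration only)."""
    text: str
    keywords: set[str]
    age_days: int


def score(memory: Memory, query_words: set[str]) -> float:
    """Keyword overlap with the query, discounted slightly for older memories."""
    overlap = len(memory.keywords & query_words)
    return overlap / (1 + 0.01 * memory.age_days)


def build_context(query: str, memories: list[Memory], max_chars: int = 200) -> str:
    """Pick the most relevant memories that fit the budget, then append the question."""
    query_words = set(query.lower().split())
    ranked = sorted(memories, key=lambda m: score(m, query_words), reverse=True)

    chosen: list[str] = []
    used = 0
    for memory in ranked:
        if score(memory, query_words) == 0:
            break  # everything after this point is irrelevant to the query
        if used + len(memory.text) > max_chars:
            continue  # too big for the remaining budget, try a smaller one
        chosen.append(memory.text)
        used += len(memory.text)

    return "\n".join(chosen + [f"Question: {query}"])
```

Given a goal mentioned three weeks ago, a note about the moderators and an off-topic meme, a question about the members goal would pull in only the goal. The budget is the important design choice here: the context window is finite, so something always has to be left out.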

Andrej Karpathy: Software Is Changing (Again)

In a recent talk he delivered for Y Combinator, Andrej Karpathy (my absolute favourite educator on AI) talked about how AI has changed how we need to think about and design the UI and UX of products.

I know what you’re thinking. We’ve all heard this being discussed countless times…

This talk felt different though. It really landed with me.

Especially 2 specific concepts that he discussed…

The Autonomy Slider

Andrej talks about how all the best AI apps that are being built at the moment very cleverly allow you to self-select the amount of autonomy you want to give the AI agent within the system.

You should be able to change this autonomy level at any point, and actually it may vary many times within a single session.

We aren’t talking about a literal slider UI component here (although that would be fun), it’s more about baking this into the experience.

Take Cursor, the AI coding platform, for example. Cursor allows you to select small chunks of code and use AI to only edit this specific chunk, or you can allow AI to edit a whole file, or, get this, you can even allow AI to edit the whole codebase.
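If you wanted to bake that idea into your own product, one way to think about it is as a permission level that gates what the AI is allowed to touch. The sketch below is a made-up illustration in Python, not Cursor’s actual implementation: the `Autonomy` levels and the `can_edit` function are hypothetical names.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical autonomy levels, from least to most freedom."""
    SELECTION = 1  # AI may edit only the highlighted chunk
    FILE = 2       # AI may edit anywhere in the currently open file
    CODEBASE = 3   # AI may edit any file in the project


def can_edit(level: Autonomy, path: str, open_file: str, in_selection: bool) -> bool:
    """Return True if an AI edit to `path` is allowed at this autonomy level."""
    if level == Autonomy.CODEBASE:
        return True
    if level == Autonomy.FILE:
        return path == open_file
    # SELECTION: must be the open file and inside the highlighted chunk
    return path == open_file and in_selection
```

Because the level is just a value passed in with each request, the user can change it at any point in a session.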

The Verification, Generation Loop

Another very interesting concept he dived into was partial autonomy, and the fact that almost all AI apps should still have a human in the loop.

Almost all interactions with AI, whether you’re generating text, images, videos or code, contain the same loop.

AI generates something.

Humans verify it.

Repeat.

This is an epic loop, but it’s a loop with a huge bottleneck. Us. Humans.

He therefore suggests that a huge part of building an AI app should actually be focused not on the AI or the generation, but on the verification process.

If you make this easy and fast, you win.

Yet again Cursor is a great example. They make it very clear which code has been edited and allow you to very easily navigate through the codebase and use keyboard shortcuts to accept or reject changes.
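The loop itself is simple enough to sketch in a few lines of Python. Everything here is an assumption for illustration: `generate` stands in for whatever model call you use, and `verify` stands in for the human review step, returning `None` to accept or a feedback string to reject.

```python
from typing import Callable, Optional


def generate_verify_loop(
    generate: Callable[[str, Optional[str]], str],
    verify: Callable[[str], Optional[str]],
    task: str,
    max_rounds: int = 3,
) -> Optional[str]:
    """Alternate AI generation and human verification until a draft is accepted."""
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        draft = generate(task, feedback)  # AI generates something
        feedback = verify(draft)          # human verifies it (None means accepted)
        if feedback is None:
            return draft
    return None  # nothing was accepted within the allowed rounds
```

Notice that the only step a person has to wait on is `verify`, which is why making that step fast and obvious matters so much.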

Other articles + videos we’ve found interesting this week…

The Great Differentiation → The crux of this post by Packy McCormick is that current technology is turning us into ‘infinite copy machines’. Every website looks the same, every pitch feels the same. Success is in differentiation, and differentiation in a way that can’t be copied. Or at least can’t be copied well.

Replit Collaborates with Microsoft to bring Vibe Coding to Enterprise Customers → Vibe coding, the process where anyone, even non-devs, can bring a product to life using the power of prompting, has just reached the enterprise level. This partnership could result in a wave of ‘mini apps’ vibed with the goal of patching day-to-day workflow gaps inside their companies. 💪

Books we’re reading this summer 2025 → Reading the right book at the right time can completely flip your perspective and cause you to rethink everything. A16z know this well, so they’ve pulled together a very impressive range of books to read over summer: F1 and sci-fi all the way to the Renaissance.

Thanks to this list I’m now reading a book on Octopus intelligence… 🐙

+1 for "context engineering" over "prompt engineering" → Yet another post about how ‘prompt engineering’ over-trivialises something that is actually very complex. Providing an LLM with the right context for it to perform optimally is both a complex engineering task, and an art…

If you’re interested in the topic of AI and memory here are a few more pieces we found interesting for you to dig into…

Some thoughts on human-AI relationships

Beyond AI's Blackbox: Building Technology That Serves Humanity

Why Centralized AI Is Not Our Inevitable Future

What we’ve been up to…

(As always) it’s been quite a hectic few weeks:

EthCC → Last week the majority of the team ventured out to Cannes for EthCC, which was A LOT of fun. We got to spend a tonne of time talking to different projects in the web3 and AI space, deepening our relationships with our partners and (most importantly) aligning as a team in the beautiful French sun!

Since getting back it’s been all hands on deck helping to onboard all the communities who we spoke to last week. Very exciting!!

👋 Welcome Leo! → Leo joined our team a couple of weeks ago as an intern and he’s been absolutely smashing it! He’s jumped right into the deep end and is working on all sorts, including curating our Community Agents AI knowledge base. 👀

What we’ve been up to June ‘25 → Just in case you missed it we dropped a company update covering everything that we’ve been working on.

New website, new partnerships, product updates, experiments, it’s all in there. 💪

And that’s all for this week.

Have a great weekend, Dan and the OPENFORMAT team 👋🏽

Join our community

Join our Telegram group, The Format, for relevant news and conversations.

Check out our website (feedback much appreciated!!)

Join our Discord to get all the real-time updates on what is being built with Open Format

Subscribe to our YouTube → we are currently running a build series where we are streaming the whole process as we build our new product in public